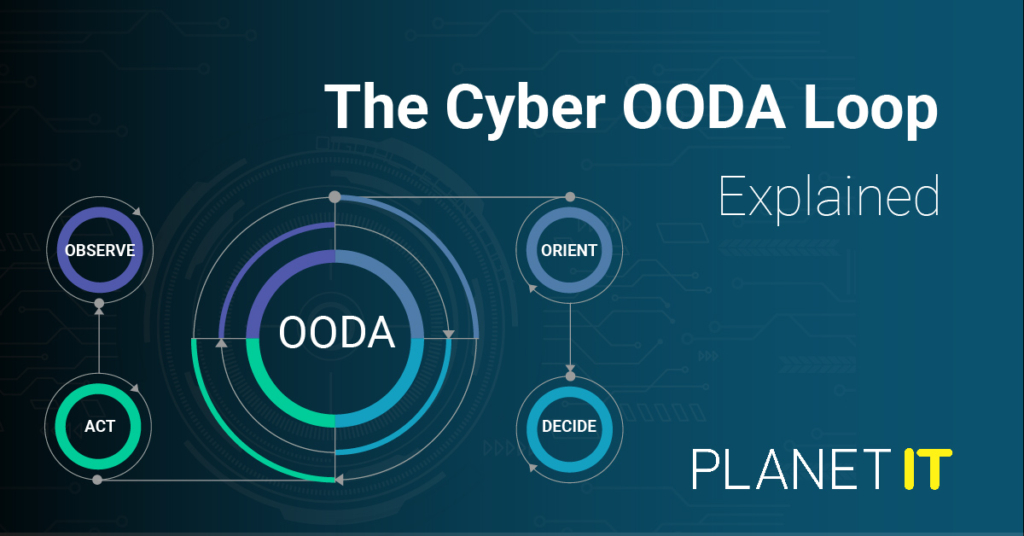

In Shrek’s words (well, sort of), “Onions have layers. Cyber security has layers… You get it? They both have layers.” He has a point!

You may have heard of the term Defence-In-Depth. The principle is that the more layers of security you have, the better protected your business will be from the threat actors who seek to affect your business, damage your workflow and disrupt your profitability. In the cyber security space, we often liken this approach to an onion, and I cannot liken anything to an onion without seeing Donkey’s face as Shrek explains the principle of having layers!

In this article, we’ll peel back the layers (pun intended) to understand why having multiple security measures is crucial for safeguarding our valuable data. Most importantly, we’ll look at how and why your business, regardless of size, needs to take the onion seriously so that you’re not leaving yourself woefully underprepared.

Having worked in the IT and Cyber Security space for over 15 years, I have seen first-hand the devastation, disruption and loss of business caused by a failure to take a layered approach to protection. I have even had the misfortune of seeing well-established companies fold due to their lack of investment in cyber security.

The “defence in depth” strategy emphasises creating multiple layers of security around various components in your IT environment. Let’s explore these layers and understand their significance.

The Onion Approach To Cyber Security

Imagine an onion: it has concentric layers that wrap around its heart. Similarly, our data needs layers of protection. In this scenario, our data, our intellectual property, and our customers are the heart of our onion! However, we should consider the outside layers first, as they are the most vulnerable to the first attack.

People, The Human Layer (AKA The Human Firewall)

In any business, the most significant risk to your data security is always your people. We are all human, we all make mistakes, and therefore we all need training to understand how to reduce the risk we pose to the business and how best to protect the systems we use every day. I call this the Human Firewall: the largest attack surface and the easiest to harden and develop. However, it is usually the most underdeveloped layer in businesses that have suffered a cyber attack. To build this layer, you should:

Implement strong Security Policies: Educate users about best practices, how the business expects them to interact with the systems and data and what could go wrong if they don’t.

Have strong Business Conduct Guidelines: Promote security awareness by giving the staff all the training to correctly use the system and strong guidelines on what happens when you fail to adhere to the expectations.

End User Training and Testing: Test your users every month, train them every six months, and don’t always use the same training and testing. You should have strong phishing, cyber security and data protection training in place, with regular assessments, training and re-evaluation. Don’t allow complacency.

Comply with Local Regulations: Ensure that your staff know the regulations and expectations of your operational locations, be that EU, UK, US or any other regional regulation; not knowing is not a justification!
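
The testing and training cadence above can be tracked with a simple overdue check. The sketch below is a minimal illustration only: it assumes "every month" means a 30-day window and "every six months" means a 183-day window, and the `StaffRecord` type, its field names and the sample dates are all hypothetical rather than taken from any particular training platform.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed windows: "every month" ~ 30 days, "every six months" ~ 183 days.
TEST_INTERVAL = timedelta(days=30)
TRAINING_INTERVAL = timedelta(days=183)


@dataclass
class StaffRecord:
    """Hypothetical record of when a user was last tested and trained."""
    name: str
    last_phishing_test: date
    last_training: date


def overdue_actions(record: StaffRecord, today: date) -> list[str]:
    """Return the actions that are overdue for this user as of `today`."""
    actions = []
    if today - record.last_phishing_test > TEST_INTERVAL:
        actions.append("phishing test")
    if today - record.last_training > TRAINING_INTERVAL:
        actions.append("training")
    return actions


if __name__ == "__main__":
    today = date(2024, 6, 1)
    # Sample users and dates are made up for illustration.
    staff = [
        StaffRecord("alice", date(2024, 5, 20), date(2024, 1, 15)),
        StaffRecord("bob", date(2024, 3, 1), date(2023, 10, 1)),
    ]
    for person in staff:
        print(person.name, overdue_actions(person, today))
```

Running a check like this on a schedule gives you a list of who needs attention, which helps stop the cadence slipping into complacency.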

Physical Access: Locked Rooms and Restricted Areas

It goes without saying that the physical protection afforded to any office, data centre, server room, or workspace is critical to the implementation of reasonable security standards. It is also critical when we think about how we stop bad actors from gaining entry to systems that are otherwise well protected digitally. This is often an area where IT teams pass off the responsibility to the facilities team or show no interest in site management, but this should never be the case.

Secure physical spaces prevent unauthorised entry. You need to ensure that every server room door is locked, that all data centres have restricted access, and that access control mechanisms are deployed around your business with the correct level of entry and authority for all users, roles and responsibilities. This should be paired with CCTV and a valid security system.

Network Security: Fortifying the Digital Perimeter

This is usually where most IT professionals and business owners think cyber security starts and ends. This is simply not true. It is a big part of the puzzle, but by this point, two layers of the onion have already been broken through, and we are dangerously close to risking it all.

You need to consider the breadth of the solutions you choose when it comes to this layer, as we need to cover all points of ingress and lateral movement, not just the edge of the network. We often see people think about the edge too much, forgetting that the edge has been dissolving since the pandemic and the move to remote and hybrid work.

Local Area Networks (LANs): Secure switches, routers, and firewalls; this is the physical network. I would expect to see a robust firewall or SASE solution, tied into a single well-respected vendor for switching. In most cases, your internet provider will offer you a robust router which is secure and sits outside of your DMZ and the direct risk profile of your business.

Wireless Networks: With Wi-Fi, it is all about implementing strong encryption and access controls. You need to ensure that your Wi-Fi does not give untrusted devices access to business systems. In this regard, you should use a well-known vendor, have at least user-based authentication, separate SSIDs for staff and guests, and have appropriate ACLs in place backed by your LAN.

Intrusion Prevention Systems (IPS): In most cases, this will sit on your firewall and detect and block suspicious network activity. However, when you move into the medical, pharma or bio-medical space, you need to consider that you may also require IPS internally in your network to prevent insider lateral spread.

Remote Access Servers: There is always a case where someone needs to gain access to the system for legitimate reasons from outside your business. Implementing a tool like Azure Virtual Desktop or Windows 365 to provide secure and controlled access is critical.

Network Operating Systems (OS): If you want to be protected, you need to keep them updated and hardened. It goes without saying that if you are an ISO 27001, Cyber Essentials or CE Plus certified business, then this should be second nature to you. Once a device loses support from its vendor, it is a risk and must be removed from the system. There is no excuse for running a legacy operating system in 2024; you can use tools to virtualise legacy platforms, isolate them from the network and remove the underlying OS risk.
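
The rule that unsupported systems must be removed or isolated lends itself to an automated inventory check. The following is a rough sketch under stated assumptions: the `Device` type, the hostnames, the OS names and the end-of-support dates are all placeholders, and in practice the dates would come from each vendor's published lifecycle information.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Device:
    """Hypothetical inventory entry; dates would come from the vendor."""
    hostname: str
    os_name: str
    end_of_support: date
    isolated: bool = False  # True if virtualised and cut off from the network


def unsupported_devices(inventory: list[Device], today: date) -> list[Device]:
    """Return devices whose OS is past vendor support and not yet isolated."""
    return [
        d for d in inventory
        if d.end_of_support < today and not d.isolated
    ]


if __name__ == "__main__":
    # Placeholder hostnames, OS names and dates, for illustration only.
    inventory = [
        Device("fin-pc-01", "example-os-9", date(2020, 1, 14)),
        Device("lab-vm-02", "example-os-9", date(2020, 1, 14), isolated=True),
        Device("hr-pc-03", "example-os-11", date(2030, 1, 1)),
    ]
    for device in unsupported_devices(inventory, date(2024, 6, 1)):
        print(f"{device.hostname}: {device.os_name} is out of support")
```

In this sketch, a legacy platform that has already been virtualised and isolated is not flagged, which mirrors the point that isolation is the acceptable way to keep a legacy system running.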

Voice Security: Protecting Communication Channels

This is often forgotten about. IT professionals running legacy systems will often have passed the phone system to a third party or another team. However, with the integration into tools like Teams, that separation becomes a thing of the past.

Private Branch Exchange (PBX), Voice Gateways and Voice Mail Services: Secure legacy phone systems by removing them from your core network and placing them on ACL-controlled VLANs with restricted access and locked-down ports. Using a solid network with dedicated voice VLANs can go a long way to removing this risk. If your phone provider doesn’t know about this or how to do this, then they are stuck in the past. Security is key. All of this still applies if your phone system is hosted or running on someone else’s physical kit.

Unified Communication: Secure real-time communication with relevant user controls, physical restrictions and tools like conditional access and multi-factor authentication (MFA/2FA). You do not want a bad actor making calls from your platform and tricking your customers into thinking it’s you.

Endpoint Device Security: Covering All Devices

The most significant devices you own will often be the lower-risk ones. Most, if not all, will have a strong anti-virus and anti-malware product in place that takes a Zero Trust approach and offers real-time protection. But this goes beyond simply slapping anti-virus products onto your laptops.

Printers, scanners, desktops, laptops, tablets, and smartphones: each device needs protection, and that protection should come on several fronts. Each device should be enrolled into an MDM, restricted on the network in terms of its access, protected by your AV tools and, if you can, covered by a 24/7 Managed Detection and Response service.

Server Security: Safeguarding the Heart of IT

Then we get to the core of it, where your data sits and where the risk is highest. This applies whether you are on-premise, in a data centre or in the cloud. You need to manage the risk, ensure that the core functions and is protected, and maintain good heart health!

Operating Systems (OS): Regular patches and security configurations. As I said above, this goes without saying. You need to have the protection in place, and this starts with regular patching. Even a 24/7 business needs to have downtime windows to ensure systems and patches are up to date. If you can’t do this then the architecture of your environment is wrong, and you need to look at role load balancing and expanding your operational system to allow for proper updates and patching.

Applications: You need to know not only what you are running but also who it is from, and when developing internally, use secure coding practices. Applications tend to be the weak link on a server and often are the gateways that threat actors use to enter a system. Having a regular patching cadence and reviewing who you are buying applications from is critical.

Databases: If you are storing data, it should have encryption, access controls, and auditing as a minimum, with the protection that is afforded to the data being as high as it can be without implementing tooling that prevents data access.

Why the Onion Approach Matters

Hardening the Target: By forcing intruders to navigate multiple security controls, we make it harder for them to reach our data. This will prevent them from getting the easy win. The more breadth and depth we can build into our defence, the less risk we carry.

Risk Management: Balancing security and performance is crucial. Overly restrictive security affects flexibility, while leniency invites risks. However, no one has ever stood up following a breach and said, “We had enough protection”, so look at the risk profile and really understand whether you can accept a risk and how likely it is that a threat actor will see that risk as an open door.

Acceptable Risk Level: Evaluate the impact of vulnerabilities and the probability of events. The onion approach helps find the right balance but is not the complete answer. You will need to review, assess, develop and grow your business.
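
The reasoning in this section can be put into rough numbers. The sketch below is a back-of-the-envelope model, not a formal risk methodology: it assumes each layer stops an attacker independently of the others (real layers rarely behave that neatly), and every probability and the impact figure are made-up values chosen purely for illustration.

```python
from math import prod


def breach_probability(layer_bypass_chances: list[float]) -> float:
    """Chance an attacker gets past every layer, assuming independence."""
    return prod(layer_bypass_chances)


def expected_loss(layer_bypass_chances: list[float], impact: float) -> float:
    """Likelihood of a full breach multiplied by its impact."""
    return breach_probability(layer_bypass_chances) * impact


if __name__ == "__main__":
    impact = 500_000  # hypothetical cost of a breach
    one_layer = [0.5]             # e.g. relying on the firewall alone
    onion = [0.5, 0.5, 0.5, 0.5]  # e.g. people, physical, network, endpoint

    print(breach_probability(one_layer))  # 0.5
    print(breach_probability(onion))      # 0.0625
    print(expected_loss(onion, impact))   # 31250.0
```

Even with each layer only stopping half of attempts in this toy model, four layers cut the chance of a full breach from 50% to just over 6%. The same multiplication of probability by impact is one simple way to compare a risk you are thinking of accepting against the cost of adding another layer.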

In the complex realm of IT security, thinking of cyber security like an onion can guide you. Look to build layer by layer to develop a robust defence strategy and ensure your data remains safe.

So, embrace the onion approach—because cybersecurity is complex, just like Ogres, and at the end of the day, it’s for protecting what matters most.

Remember, security is a journey, not a destination, so keep building those layers!

If you want to talk to one of our experts about how we can help your business secure itself and the benefits the Onion approach could have for you, please call 01235 433900 or email [email protected]. If you want to speak to me directly, you can contact me via DM or at [email protected].

Planet IT
